Intelligent robots

AI aims to make machines act intelligently, which has led researchers to use the term “intelligent systems” to refer to such machines. The field of AI robotics, in turn, applies AI techniques to augment the capabilities of robots. The term robot denotes a mechanical agent that is capable of executing actions in the world. Robots take the forms that are most effective for their intended functions. Especially in the past few years, with the rapid development of many new kinds of robots, the ethical dimension of the logic-based decision-making that robots engage in has become increasingly complex.

💬 Definition of intelligent robots

An intelligent robot is characterized by its ability to interact with the physical environment that surrounds it and to make deliberate decisions about how to interact with it in order to increase its likelihood of achieving specific goals. For this reason, machines that just passively react to the environment (like thermometers) are usually not considered intelligent.

According to the ISO standards, a robot can be defined as an actuated mechanism programmable in two or more axes with a certain degree of autonomy, moving within its environment to perform intended tasks.

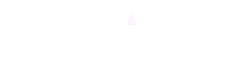

Robots serve a multitude of functions, some of which are shown in the accompanying image.

Generally speaking, a robot is made out of multiple different components:

- Effectors: arms, legs, necks, etc. → allow the robot to interact with the environment;

- Perception: sensors → to perceive the environment;

- Control: computer processor → to provide computing power;

- Communication: how a robot communicates with other agents;

- Power: to enable the robot’s functions.

We can also distinguish among three main environments in which robots can be placed: ground, air and water. These correspond, respectively, to what we refer to as:

- Unmanned ground vehicles (UGVs)

- Unmanned aerial vehicles (UAVs)

- Unmanned marine vehicles (UMVs)

The areas of artificial intelligence

In AI robotics we can distinguish seven main areas of interest:

- Knowledge representation

- Understanding natural language

- Learning

- Planning and problem-solving

- Inference

- Search

- Vision

An additional, eighth area that is being considered is collaboration, which has become crucial in recent years.

❓Check your understanding

- Define intelligent robot.

- List the three modalities of autonomous (unmanned) systems.

- List the five components common to all intelligent robots.

- Give four motivations for intelligent robots.

- List the seven areas of artificial intelligence and describe how they contribute to an intelligent robot.

A little bit of history

Robots can be viewed in three main ways:

- Tools: when robots are designed to carry out specific tasks and are thus optimized for them, but may lack the ability to do anything else;

- Agents: when robots are able to sense changes in the environment and act appropriately in response to them. This kind of interaction requires planning capabilities;

- Joint cognitive systems: when humans and robots collaborate to complete certain tasks, each pursuing different objectives to reach a common goal.

With the advent of World War II, robots were first employed as tools to handle radioactive materials safely. This required the development of mechanical linkages that allowed their mobility.

Once the war ended, the escalating nuclear arms race and the interest in nuclear energy prompted further advancements in those kinds of mechanisms, culminating in the invention of industrial manipulators, which were tools capable of performing repetitive tasks with high precision.

This led to the automation of the manufacturing industry, in which such robots have played a pivotal role ever since. They evolved into more complex robots with multiple degrees of freedom, which enabled difficult movements and operations with minimal human intervention.

Two control methods were used:

- Open loop

- Closed loop
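The difference between the two control methods can be illustrated with a minimal sketch. It assumes a hypothetical one-dimensional cart whose motion is slowed by drag that the programmer did not model; the function names, gain, and drag value are all illustrative choices, not taken from any real manipulator.

```python
def simulate(controller, target=1.0, drag=0.2, steps=50, dt=0.1):
    """Drive a 1-D cart toward `target` and return its final position."""
    position = 0.0
    for step in range(steps):
        command = controller(step, position, target)
        # Hypothetical plant: unmodelled drag removes part of every command.
        velocity = command * (1.0 - drag)
        position += velocity * dt
    return position


def open_loop(step, position, target):
    """Open loop: a pre-computed command that ignores the sensed position."""
    # Assumes a drag-free plant: 0.2 units/s over 50 steps of 0.1 s gives 1.0.
    return 0.2


def closed_loop(step, position, target, gain=2.0):
    """Closed loop: proportional feedback on the measured error."""
    return gain * (target - position)


if __name__ == "__main__":
    print("open loop  :", round(simulate(open_loop), 3))
    print("closed loop:", round(simulate(closed_loop), 3))
```

In this sketch the open-loop controller stops short of the target (at about 0.8) because it never notices the drag, while the closed-loop controller keeps correcting its command from the sensed position and ends very close to 1.0.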

As those factory manipulators evolved, more demanding tasks started to arise, such as navigating factory environments made complex and unreliable by obstacles and interference. This started a shift towards treating robots as agents rather than mere tools, which in turn created the need for agent coordination as well as human-robot interaction.

Due to the increased interaction with humans, robots need not only to execute tasks but also to do so in harmony with human actions and expectations. To this end, the designs of these robots evolved to accommodate and adapt to human limitations.

Another design principle that was adopted is swift adaptability to new tasks based on human direction.

Mobile robots

Mobile robots became crucial in this evolution, leading to the construction of autonomous robots that could operate in environments like the Moon or Mars.

💡A little more

The first example of this type of robot was Shakey, which reasoned about its actions. It used programs for perception, world modeling and planning.

Another example of this kind of autonomous mobile robot is NASA’s Sojourner Mars rover, which performed autonomous navigation and problem-solving tasks on Mars. In recent years this approach has been applied to consumer-friendly robots like Roomba.

❓Check your understanding

- With respect to the history of robotics, how did the different approaches to intelligence arise?

- Describe the difference between designing a robot as a tool, agent, or joint cognitive system in terms of the use of artificial intelligence.

Automation vs. autonomy

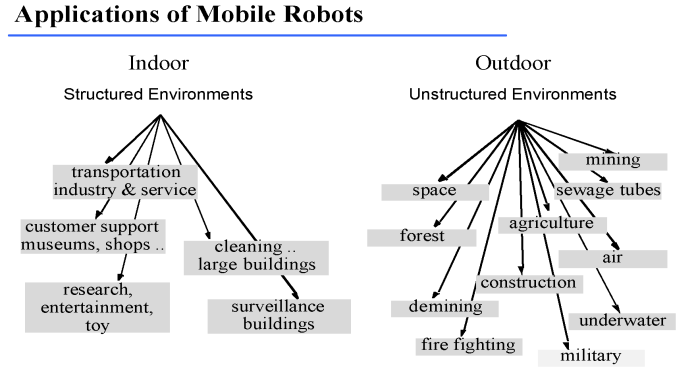

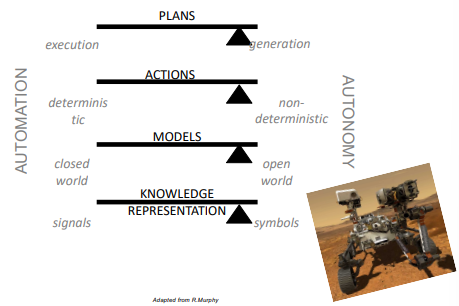

Automation has traditionally aimed at executing pre-planned actions using deterministic algorithms. Autonomy, on the other hand, has focused on a robot acting as an agent, adapting its actions based on sensed data in an open world.

To better understand the difference between these two pivotal areas of robotics, we’ll consider four key aspects:

- Plans: we distinguish plans based on generation vs. execution. A robot that only executes plans that are constructed by humans requires a smaller amount of autonomy compared to an autonomous robot that has to generate and adapt the plan itself;

- Actions: deterministic actions are easy to predict and can be tested in less complicated environments. Non-determinism, on the other hand, poses various challenges and it still represents an open research area;

- Models: robots require a computational representation of the world (a world model). Two main representations are available: closed-world and open-world. In a closed world, everything the robot will ever encounter is already known and can thus be handled using control loops that respond to all expected situations. In an open world, the world model is substantially incomplete, so the robot needs greater flexibility and adaptability. Specifically, the robot must determine which changes in the world need to be reflected in the world model and which would only add complexity without adding useful information (the frame problem);

- Knowledge representations: knowledge can be represented as signals or symbols. Automation responds to raw data or signals, while autonomy (and therefore artificial intelligence) operates over transformed data or symbols.
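The last two aspects can be made concrete with a small sketch. The symbols, actions, and threshold below are hypothetical and chosen only for illustration: a raw range reading (a signal) is turned into a symbol, a closed-world controller looks that symbol up in a fixed table, and an open-world agent tolerates and records situations the table never anticipated.

```python
# Hypothetical symbols and actions, chosen only for illustration.
KNOWN_RESPONSES = {
    "path_clear": "advance",
    "obstacle_ahead": "stop",
}


def to_symbol(range_m, threshold_m=0.5):
    """Turn a raw range reading in metres (a signal) into a symbol."""
    return "obstacle_ahead" if range_m < threshold_m else "path_clear"


def closed_world_controller(situation):
    """Closed world: every situation is assumed to be in the table."""
    # Raises KeyError for anything outside the pre-enumerated situations.
    return KNOWN_RESPONSES[situation]


class OpenWorldAgent:
    """Open world: the model is known to be incomplete and can grow."""

    def __init__(self):
        self.world_model = dict(KNOWN_RESPONSES)
        self.novel_situations = []

    def act(self, situation):
        if situation not in self.world_model:
            # Unknown situation: record it and fall back to a cautious default.
            self.novel_situations.append(situation)
            self.world_model[situation] = "stop_and_replan"
        return self.world_model[situation]
```

For example, `closed_world_controller(to_symbol(0.2))` returns `"stop"`, but the same controller raises `KeyError` for an unanticipated symbol such as `"door_ajar"`, whereas `OpenWorldAgent().act("door_ajar")` returns the cautious default and adds the new situation to its model. Deciding which novel situations are worth storing is where the extra design effort of an open-world model lies.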

It is important to note that both human and artificial decision-makers operate within the limits of their available information and are thus bounded in their rationality: a robot’s actions remain confined to its programming and cannot exceed its predetermined computational limitations.

Autonomy is often wrongly associated with a level of freedom that implies unpredictability. In the context of AI robotics, however, it is defined much more narrowly, following the mechanical tradition of self-governance rather than the moral and ethical one.

While basic autonomous capabilities may closely resemble automation, the distinction becomes clearer as complexity increases. Building a robot focusing on autonomy or automation involves making significant changes to many fundamental aspects of the robot’s structure, such as:

- Programming style: the behaviour of the robot is the result of multiple independent modules interacting, usually written in C++. When building a robot focused on automation, stable control loops are a key aspect of the design; when building a robot focused on autonomy, most of the development effort goes into artificial intelligence;

- Hardware design: depending on how much power is needed to run the robot, different choices can be made to trade off weight, computational load and cost. The environment in which the robot is placed and the kinds of tasks that it will have to complete are other key aspects that are taken into account in the hardware design;

- Kinds of failures that are allowed: failures are usually grouped into two key types:

  - Functional failures: when the robot’s expectations about the environment are incorrect, which happens when the robot operates in an open world in which real-world conditions diverge significantly from the programmed expectations. One way to mitigate this kind of failure is to design systems that are flexible enough to adjust their operations in real time;

  - Human error: about half of the failures that happen in robotics are due to human error, especially overestimation of the robot’s capabilities, that is, assuming that a machine can flawlessly replace a human (the substitution myth). This type of problem is known as the human out-of-the-loop (OOTL) problem, which arises when humans, distanced from operational duties by automation, struggle to intervene quickly and resolve issues when machines malfunction. Solutions to the OOTL problem have involved designing systems that increase the human operator’s situation awareness and facilitate a smoother transfer of control.

💬 Definition of automation and autonomy

- Automation is about physically-situated tools performing highly repetitive, pre-planned actions for well-modelled tasks under the closed-world assumption;

- Autonomy is about physically-situated agents that not only perform actions but can also adapt to the open world, where the environment and tasks are not known a priori, by generating new plans, monitoring and changing plans, and learning within the constraints of their bounded rationality.
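The contrast between executing a pre-planned sequence and generating, monitoring and changing plans can be sketched on a toy grid. Everything here is an assumption made for illustration: the grid encoding (0 = free, 1 = blocked), the function names, and the use of breadth-first search as the planner.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search over free cells; returns a list of cells or None."""
    frontier = deque([start])
    parent = {start: None}
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if (0 <= nr < len(grid) and 0 <= nc < len(grid[0])
                    and grid[nr][nc] == 0 and nxt not in parent):
                parent[nxt] = cell
                frontier.append(nxt)
    return None


def execute_fixed_plan(plan, world):
    """Automation: follow a pre-built plan; fail if the world disagrees."""
    for r, c in plan[1:]:
        if world[r][c] == 1:
            return False  # blocked, and there is no mechanism to recover
    return True


def execute_with_replanning(believed, world, start, goal):
    """Autonomy: monitor each step, update the model, replan when blocked."""
    believed = [row[:] for row in believed]  # private copy of the world model
    position, visited = start, [start]
    plan = plan_path(believed, position, goal)
    while plan is not None and position != goal:
        r, c = plan[1]
        if world[r][c] == 1:
            believed[r][c] = 1  # sensed an obstacle the model did not contain
            plan = plan_path(believed, position, goal)
        else:
            position = (r, c)
            visited.append(position)
            plan = plan[1:]
    return visited if position == goal else None


if __name__ == "__main__":
    believed = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
    world = [[0, 1, 0], [0, 0, 0], [0, 0, 0]]  # obstacle missing from the model
    plan = plan_path(believed, (0, 0), (0, 2))
    print(execute_fixed_plan(plan, world))
    print(execute_with_replanning(believed, world, (0, 0), (0, 2)))
```

With the obstacle at `(0, 1)` missing from the believed map, the fixed plan fails, while the replanning loop detours through the middle row and still reaches the goal. Real systems would use richer planners and sensing, but the split between executing a plan and monitoring and regenerating it is the same.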

Examples of automation and autonomy are shown below:

Overall, when designing human-machine systems, five key aspects should be taken into account:

- Fitness: The balance between optimality and resilience;

- Plans: The trade-off between efficiency and thoroughness;

- Impact: The decision between centralized and distributed control;

- Perspectives: The balance between local and global views;

- Responsibility: The consideration between short-term and long-term goals.

❓Check your understanding

- Describe automation and autonomy in terms of a blending of four key aspects: generation or execution of plans, deterministic or non-deterministic actions, closed-world or open-world models, and signals or symbols as the core knowledge representation.

- Discuss the frame problem in terms of the closed-world assumption and open-world assumption.

- Describe bounded rationality and its implications for the design of intelligent robots.

- Compare how taking an automation or autonomy approach impacts the programming style and hardware design of a robot, the functional failures, and human error.

- Define the substitution myth and discuss its ramifications for design.

- List the five trade spaces in human-machine systems (fitness, plans, impact, perspective, and responsibility), and for each trade space give an example of a potential unintended consequence when adding autonomous capabilities.